Generative AI in Software Testing: The Ultimate QA Guide

If you’ve ever felt the pressure of a looming release date while staring down a massive list of manual test cases, you know the struggle. But what if you could change that narrative? That’s where generative AI in software testing comes in, fundamentally shifting the focus from tedious, repetitive scripting to intelligent, automated creation. Think of it as an expert co-pilot for your entire QA lifecycle.

The New Frontier in Quality Assurance

Generative AI isn’t just another automation tool on the pile; it’s more like a creative partner for your team. Instead of just running scripts someone else wrote, it can actually understand your project’s requirements, user stories, and even visual mockups to build comprehensive test scenarios from the ground up.

Imagine this: you describe a user login process in plain English, and moments later, you have a full suite of ready-to-run tests. That’s the real power it brings to the table. It tackles some of the oldest headaches in QA—brittle tests that break with every minor UI tweak, manual bottlenecks that slow everyone down, and the struggle to find good data for all those tricky edge cases.

By automating the most draining parts of test creation, it frees up your skilled QA engineers to focus on what humans do best: strategic thinking, exploratory testing, and complex problem-solving.

A Strategic Shift From Manual to Intelligent

Adopting generative AI is about more than just new software; it’s a completely different way of thinking about quality. It gives teams the ability to get much broader test coverage far more quickly and efficiently than ever before. For example, some vendors report an 84% first-run success rate when generating tests directly from requirements or wireframes, along with test development up to 9x faster, compressing work that once took weeks into a matter of days.

To see this difference in action, let’s compare the old way of doing things with the new, AI-powered approach.

How Generative AI Is Upgrading Software Testing

| Testing Aspect | The Traditional Way | The Generative AI Way |
|---|---|---|
| Test Case Creation | Manually written line-by-line based on requirements. Slow and prone to human error. | Automatically generated from plain text, user stories, or UI mockups. Incredibly fast and consistent. |
| Test Data Generation | Manual creation of limited, often repetitive data sets. Time-consuming to cover edge cases. | Intelligently creates diverse, realistic data on the fly, easily covering countless scenarios. |
| Test Maintenance | Scripts break with UI changes, requiring significant manual effort to fix and update. | Self-healing tests adapt to minor application changes, drastically reducing maintenance overhead. |
| Finding Bugs | Relies on the tester’s intuition and predefined paths. Can miss unexpected defects. | Uncovers hidden bugs by exploring application paths and user flows that humans might overlook. |
| Overall Speed | A bottleneck. Testing can’t keep pace with rapid development cycles. | An accelerator. Testing keeps up with agile and DevOps pipelines, enabling faster releases. |

The table makes it clear: this isn’t just a minor improvement. It’s a complete overhaul of the process.

By intelligently generating tests, data, and code, generative AI doesn’t just make testing faster—it makes it smarter. It helps uncover defects earlier, reduces maintenance overhead, and ultimately contributes to a higher-quality product delivered to market more quickly.

This transition allows businesses to finally keep pace with demanding release schedules without cutting corners on quality. Working with an expert AI solutions partner can help you integrate this technology smoothly, turning its potential into real business results and a serious competitive edge. By stepping into this new frontier, you’re setting your organization up to build better, more reliable software.

Putting Generative AI to Work in Your Testing Lifecycle

Knowing what generative AI can do in theory is one thing, but seeing it in action is where it gets really interesting. This is where the abstract concepts become practical tools that can genuinely reshape how we approach quality assurance. We’re not just talking about making old methods faster; we’re talking about unlocking new capabilities that were simply out of reach before.

Think of generative AI as a force multiplier for your QA team. It takes on the tedious, repetitive work that eats up so much time, freeing up your testers to focus on what humans do best: strategic thinking, complex problem-solving, and creative exploratory testing. Let’s dig into the specific areas where this technology is already making a huge difference.

Generating Comprehensive Test Cases from Requirements

The traditional way of creating test cases is a grind. A QA engineer pores over dense requirements documents, trying to translate every detail into individual tests. It’s slow, mind-numbing, and leaves plenty of room for misinterpretation or missed scenarios.

Generative AI completely flips this on its head.

You can now feed a large language model (LLM) your user stories, design mockups, or technical specs, and it will generate a comprehensive test suite for you. The AI reads and understands the intended functionality—from both text and images—and then crafts test cases that cover the happy path, negative scenarios, and tricky edge cases that a human might easily overlook.

Imagine you’re testing an e-commerce checkout. You could give the AI a simple prompt like this:

“Generate detailed test cases for a checkout process that includes adding items to a cart, applying a discount code, entering shipping information, selecting a payment method, and confirming the order. Include tests for invalid discount codes, out-of-stock items, and failed payment authorizations.”

What you get back is a structured set of draft test cases that, once reviewed, can be imported into your test management tool. This can save dozens of hours of manual effort and help your team achieve much better test coverage, much faster.
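One practical way to get that structure is to ask the model to reply in JSON and validate the reply before anything is imported. The sketch below is a minimal illustration, assuming a hypothetical schema of our own (`id`, `title`, `type`, `steps`, `expected`); the model call itself is left out, and `raw` stands in for whatever text the model returned.

```python
import json
from dataclasses import dataclass

# Hypothetical schema for one generated test case (our own illustration, not a standard).
REQUIRED_FIELDS = {"id", "title", "type", "steps", "expected"}
ALLOWED_TYPES = {"happy_path", "negative", "edge_case"}


@dataclass
class GeneratedTestCase:
    id: str
    title: str
    type: str
    steps: list[str]
    expected: str


def parse_test_cases(raw: str) -> tuple[list[GeneratedTestCase], list[str]]:
    """Parse the model's JSON reply; return the valid cases plus a list of problems."""
    cases: list[GeneratedTestCase] = []
    problems: list[str] = []
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [], [f"reply is not valid JSON: {exc}"]
    if not isinstance(data, list):
        return [], ["expected a JSON array of test cases"]
    for i, item in enumerate(data):
        if not isinstance(item, dict):
            problems.append(f"item {i}: not an object")
            continue
        missing = REQUIRED_FIELDS - set(item)
        if missing:
            problems.append(f"item {i}: missing {sorted(missing)}")
            continue
        if item["type"] not in ALLOWED_TYPES:
            problems.append(f"item {i}: unknown type {item['type']!r}")
            continue
        if not item["steps"]:
            problems.append(f"item {i}: no steps")
            continue
        cases.append(GeneratedTestCase(**{k: item[k] for k in REQUIRED_FIELDS}))
    return cases, problems
```

Anything that lands in `problems` goes back to a reviewer, or back to the model along with the error message, rather than straight into the test management tool.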

Creating Realistic and Diverse Synthetic Data

Getting the right test data is a classic QA headache. Production data is a goldmine of real-world scenarios, but it’s loaded with sensitive user information, a major compliance risk. On the other hand, data created by hand is often too simple and fails to reflect the complexity of real user behavior.

This is where synthetic data generation comes in. Generative AI can analyze the patterns and statistical properties of your production data and then create a brand-new, entirely artificial dataset. It looks and feels real but contains no actual user information.

This is a game-changer for a few reasons:

- Covering Edge Cases: You can tell the AI to generate data for those rare but critical situations, like user profiles with special characters, transactions with unusually high values, or accounts with convoluted order histories.

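- Ensuring Privacy: Well-generated synthetic data contains no real personally identifiable information (PII), which makes it far easier to use in line with regulations like GDPR and HIPAA. It is still worth checking that the generator has not reproduced real records from the data it learned from.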

- Load and Performance Testing: Need to simulate 10,000 users hitting your site at once? You can generate massive volumes of realistic data to stress-test your systems and find performance bottlenecks before your customers do.
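The idea can be illustrated without an LLM at all. The sketch below is a deliberately simple statistical stand-in, not a generative model: it learns per-column statistics (mean and standard deviation of order amounts, country frequencies) from a small sample, samples new records from those, and then appends hand-picked edge cases. The field names and the sample data are hypothetical.

```python
import random
import statistics
from collections import Counter


def fit_profile(sample: list[dict]) -> dict:
    """Learn simple per-column statistics from a (hypothetical) production sample."""
    amounts = [row["amount"] for row in sample]
    countries = Counter(row["country"] for row in sample)
    return {
        "amount_mean": statistics.mean(amounts),
        "amount_stdev": statistics.stdev(amounts),
        "countries": list(countries),
        "country_weights": list(countries.values()),
    }


def generate_synthetic(profile: dict, n: int, seed: int = 0) -> list[dict]:
    """Sample n artificial records; names and IDs are invented, never copied."""
    rng = random.Random(seed)  # seeded so test runs are reproducible
    rows = []
    for i in range(n):
        amount = max(0.01, rng.gauss(profile["amount_mean"], profile["amount_stdev"]))
        rows.append({
            "user_id": f"synthetic-{i:05d}",
            "name": f"Test User {i}",
            "country": rng.choices(profile["countries"], weights=profile["country_weights"])[0],
            "amount": round(amount, 2),
        })
    # Deliberately injected edge cases that random sampling would rarely produce.
    rows.append({"user_id": "synthetic-edge-1", "name": "Zoë O'Brien-Łukasz <script>",
                 "country": profile["countries"][0], "amount": 0.01})
    rows.append({"user_id": "synthetic-edge-2", "name": "Test User Max",
                 "country": profile["countries"][0], "amount": 999_999.99})
    return rows
```

A real generative model can also capture correlations between columns, which this per-column approach ignores; the explicit edge-case step stays useful either way.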

Writing and Maintaining Test Automation Code

Test automation has been around for years, but writing and maintaining the scripts is still a major time sink. Now, generative AI tools like GitHub Copilot are acting as smart coding partners for QA engineers.

These AI assistants can:

- Generate Boilerplate Code: Give it a simple description in plain English, and it will write the basic framework for your test scripts in Python, Java, or JavaScript.

- Refactor Existing Scripts: The AI can analyze your current test code and suggest smart ways to make it cleaner, more efficient, and easier to maintain.

- Implement Self-Healing: This is a big one. When a developer changes a UI element, like a button ID, it usually breaks the test script. AI can detect that broken locator and automatically suggest or apply a fix. This dramatically cuts down on the constant, frustrating work of test maintenance. As we explored in our guide, preparing your business with generative AI integration services is key to leveraging these capabilities.
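Tools implement self-healing in different ways, and many keep the details proprietary. One common idea is to store a "fingerprint" of an element’s attributes when a test last passed and, if the primary locator fails, pick the on-page element whose attributes best match that fingerprint. A minimal sketch of that idea, with the page modeled as a plain list of attribute dictionaries (a hypothetical simplification; a real tool would read attributes from the browser through Selenium or Playwright):

```python
from typing import Optional


def similarity(a: dict, b: dict) -> float:
    """Jaccard similarity of two elements' attribute key/value pairs."""
    pa, pb = set(a.items()), set(b.items())
    return len(pa & pb) / len(pa | pb) if pa | pb else 0.0


def find_element(page: list[dict], locator_id: str, fingerprint: dict,
                 threshold: float = 0.5) -> tuple[Optional[dict], bool]:
    """Return (element, healed); healed=True means the fallback match was used."""
    for element in page:
        if element.get("id") == locator_id:
            return element, False  # primary locator still works
    # Primary locator broke: score every candidate against the stored fingerprint.
    best = max(page, key=lambda el: similarity(el, fingerprint), default=None)
    if best is not None and similarity(best, fingerprint) >= threshold:
        return best, True  # a suggested fix; a human should confirm it
    return None, False
```

The threshold is an arbitrary illustrative value; set too low, the "healed" test may silently click the wrong element, which is why suggested fixes deserve review.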

Intelligent Bug Detection and Triage

The final stretch of any testing cycle is all about finding, logging, and prioritizing bugs. Generative AI helps streamline this by sifting through application logs, crash reports, and even user feedback to spot anomalies that signal a potential defect.

But it doesn’t stop at just finding the bug. The AI can also:

- Predict Severity: By comparing a new issue to historical bug data, it can make an educated guess about its potential impact and assign a priority level.

- Suggest Root Causes: It can analyze technical details like stack traces to give developers a head start on figuring out what went wrong.

- Automate Assignment: Based on the part of the application that’s affected, the AI can automatically assign the bug ticket to the right development team. This removes much of the manual triage work and gets the fix underway much faster.
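Production triage tools typically rely on trained models or LLMs for this. The sketch below swaps in a much simpler technique, nearest-neighbour matching on word overlap, purely to show the shape of the workflow: predict severity from the most similar historical bugs, then route the ticket by component. The bug history, component names, and team mapping are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical history of resolved bugs: (summary, severity).
HISTORY = [
    ("payment authorization fails at checkout", "critical"),
    ("checkout crashes when cart is empty", "critical"),
    ("typo in footer link text", "low"),
    ("profile avatar misaligned on mobile", "low"),
    ("discount code ignored for second item", "major"),
]

# Hypothetical mapping from application component to owning team.
COMPONENT_TEAMS = {"checkout": "payments-team", "profile": "accounts-team"}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def predict_severity(summary: str, k: int = 3) -> str:
    """Majority severity among the k most similar historical bugs."""
    words = tokens(summary)
    ranked = sorted(HISTORY, key=lambda h: jaccard(words, tokens(h[0])), reverse=True)
    votes = Counter(severity for _, severity in ranked[:k])
    return votes.most_common(1)[0][0]


def assign_team(component: str) -> str:
    # Unknown components fall back to a human triage queue.
    return COMPONENT_TEAMS.get(component, "qa-triage")
```

The fallback queue matters: when the model has no confident answer, the ticket should still reach a person rather than sit unassigned.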

A Practical Roadmap for AI Integration in QA

Bringing generative AI into your software testing isn’t about flipping a switch and hoping for the best. It’s a gradual, thoughtful process. The most successful teams don’t start with a massive, company-wide mandate; they begin with a single, well-chosen pilot project to learn the ropes, show some early wins, and build momentum.

The whole idea is to break this down into clear, manageable steps. This is the same way we approach our AI development services, focusing on practical strategies that fit into how you already work. By starting small, you can integrate these powerful tools without throwing your team or your release schedule into chaos.

Step 1: Identify the Perfect Pilot Project

Your first move is the most important one: pick a pilot project that’s small enough to manage but big enough to matter. You’re looking for a pain point in your testing cycle—something manual, repetitive, and frankly, a bit of a drag for your team.

Good candidates for a pilot often pop up in a few common areas:

- Repetitive Regression Suites: Think about a stable part of your application where regression tests are a constant, mind-numbing task.

- Data-Intensive Features: Is there a feature that needs tons of different and complex data sets that are a headache to generate by hand?

- New API Endpoints: Use generative AI to quickly spin up a solid set of functional and security tests for a brand-new API.

By zeroing in on one specific area, you give your team a safe space to experiment, learn, and gain confidence. The key is to set clear goals. Don’t just “try AI”—aim to cut test creation time for that module by 40% or boost its test coverage from 70% to a much healthier 90%.
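Goals like these are easy to check mechanically at the end of the pilot. A minimal sketch using the two targets mentioned above (a 40% cut in creation time and coverage reaching 90%); the class, function, and the numbers in the example are our own illustration.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    baseline_hours: float      # test creation time for the module before the pilot
    pilot_hours: float         # test creation time with AI assistance
    baseline_coverage: float   # e.g. 0.70 for 70%
    pilot_coverage: float


def evaluate(result: PilotResult, min_time_cut: float = 0.40,
             min_coverage: float = 0.90) -> dict[str, bool]:
    """Compare pilot measurements against the goals agreed before it started."""
    time_cut = 1 - result.pilot_hours / result.baseline_hours
    return {
        "time_goal_met": time_cut >= min_time_cut,
        "coverage_goal_met": result.pilot_coverage >= min_coverage,
        "coverage_improved": result.pilot_coverage > result.baseline_coverage,
    }
```

For example, going from 50 hours to 28 hours is a 44% cut, so `evaluate(PilotResult(50, 28, 0.70, 0.91))` reports all three checks as met.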

Step 2: Understand the Technical Architecture

So, how does this actually work? Integrating generative AI into your QA pipeline usually means connecting a Large Language Model (LLM) to the tools you already use every day. This connection is almost always made through APIs, which serve as the messenger between the AI model and your development environment.

A typical workflow looks something like this:

- A QA engineer writes a detailed instruction (a prompt) in their test management tool or IDE.

- An API call shoots that prompt over to a generative AI model, like one from OpenAI, Anthropic, or even a private model hosted in-house.

- The model gets to work and sends back the requested test cases, test data, or automation code.

- That output is then piped directly into your CI/CD tools—think Jenkins, GitLab, or Azure DevOps—ready to be executed automatically.

This kind of setup means the AI fits into your world, supercharging what your team can do instead of forcing everyone to learn a completely new system from scratch. For the bigger picture, see our guide on how to apply AI for software development.

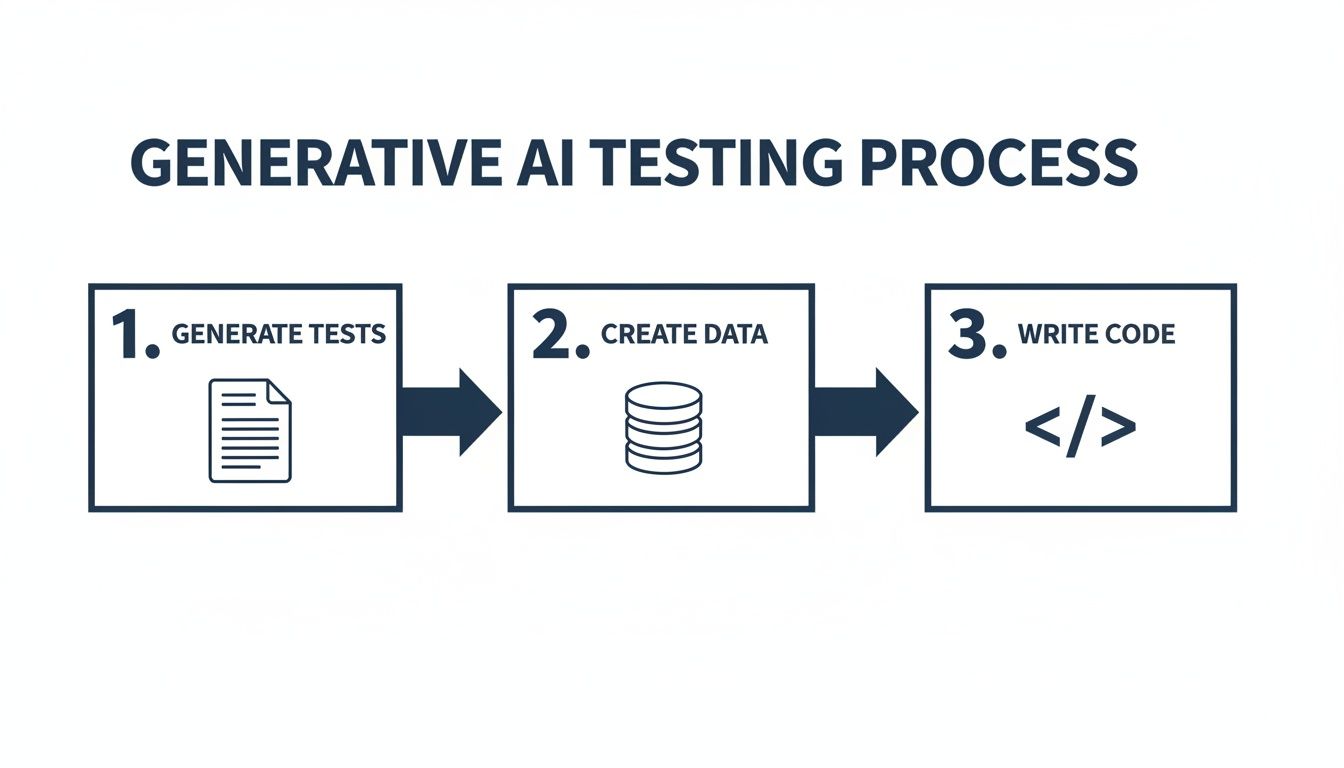

This simple diagram breaks the core process down into three fundamental actions, which together form a tight, automated loop that speeds up the entire testing cycle.

Step 3: Master the Art of Prompt Engineering

Here’s the thing about working with AI: the quality of what you get out is directly tied to the quality of what you put in. This is where prompt engineering becomes the new essential skill for any modern QA team. It’s less about coding and more about clear communication—learning how to ask the AI for exactly what you need.

A well-crafted prompt is like giving a detailed blueprint to a master builder. It provides context, sets boundaries, and spells out the desired outcome, leaving no room for guesswork.

A vague request like, “Test the login page,” will get you a vague, unhelpful answer. A powerful prompt is all about the details:

“Generate five negative test cases for our login form using Selenium and Java. The form has ‘username’ and ‘password’ fields and a ‘submit’ button. I need tests for an incorrect password, a non-existent username, an empty username, an empty password, and a locked account. Please format the final output as a JUnit test class.”

That level of specificity is far more likely to get you code that’s close to usable, correctly formatted, and aligned with what you’re trying to test. Your testers are no longer just executing tests; they’re becoming strategic directors, guiding a powerful AI to do the heavy lifting with precision.
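Prompts like this are easier to keep consistent across a team when they are assembled from a template rather than typed freehand. A minimal sketch (the function and its parameters are our own illustration, not any tool’s API); the number of requested tests is derived from the scenario list, so the count and the list can never disagree.

```python
def build_test_prompt(framework: str, language: str, component: str,
                      elements: list[str], scenarios: list[str],
                      output_format: str, kind: str = "negative") -> str:
    """Assemble a specific prompt: context, boundaries, and output format."""
    if not scenarios:
        raise ValueError("list at least one scenario to test")
    element_list = ", ".join(f"'{e}'" for e in elements)
    scenario_list = "\n".join(f"- {s}" for s in scenarios)
    return (
        f"Generate {len(scenarios)} {kind} test cases for our {component} "
        f"using {framework} and {language}.\n"
        f"The {component} has these elements: {element_list}.\n"
        f"Write exactly one test per scenario:\n{scenario_list}\n"
        f"Format the final output as {output_format}."
    )
```

Keeping templates like this in version control also lets the team review and improve prompts the same way they review test code.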

Navigating the Challenges of AI-Generated Tests

Generative AI brings incredible speed to the testing process, but it’s not a silver bullet. If you’re going to use it effectively, you have to go in with a clear-eyed view of its current limitations. Thinking of it as a magic wand that creates perfect, production-ready tests is a recipe for disappointment. Acknowledging the hurdles is the first step toward building a truly robust, AI-assisted QA strategy.

The biggest issue right now is what I call the “confidence gap.” AI models are powerful, but they aren’t perfect, and their output absolutely requires human validation. One statistic really brings this home: by some estimates, more than 60% of AI-generated code has issues that a human has to fix, a defect rate reported to be far higher than for human-written code. On top of that, other surveys show over 70% of developers regularly rewrite AI-generated code before it goes anywhere near production.

This data isn’t a reason to ditch the technology. Far from it. It just highlights how critical human oversight is and why having an expert partner to help navigate these complexities makes all the difference.

The Critical Need for Validation

AI-generated tests can sometimes miss the subtle, nuanced understanding that comes from an experienced QA engineer. They might not grasp a tricky piece of business logic, misunderstand complex dependencies, or generate tests that are technically correct but practically useless. This is why a rigorous validation process is completely non-negotiable.

Simply put, you can’t just trust and deploy whatever an AI spits out. Every single test case, data set, and code snippet has to be reviewed and verified by a human expert.

Think of the AI as a brilliant but junior team member. It can crank out a massive volume of work in no time, but it still needs a senior engineer’s guidance to refine the output, catch mistakes, and make sure the final product actually aligns with the project’s goals.

This human-in-the-loop approach is key. It ensures the speed you gain from AI doesn’t come at the expense of quality or reliability. It’s about making your experts more powerful, not replacing them.

Understanding and Mitigating Hallucinations

A well-known quirk of large language models is their tendency to “hallucinate”—that is, to make up plausible-sounding but factually incorrect or nonsensical information. In the world of software testing, this could be a test that tries to click on a UI element that doesn’t exist, call a function that isn’t available, or assert an outcome that’s literally impossible.

These hallucinations can be a huge time-waster, sending engineers down a rabbit hole trying to debug a test that was flawed from the very beginning. To get around this, we use advanced techniques like Retrieval Augmented Generation (RAG). This approach essentially gives the AI a “cheat sheet” by grounding its responses in a specific set of trusted documents or code repositories, making it much less likely to invent details from thin air.
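A production RAG setup usually retrieves with vector embeddings and a vector store. The sketch below swaps in simple keyword overlap so it stays self-contained, but the two halves are the same: retrieve trusted snippets (here a hypothetical inventory of real UI locators) and place them in the prompt, then check the generated test against that inventory so any locator the model invented is flagged before anyone starts debugging it.

```python
import re

# Hypothetical trusted knowledge base: snippets from docs or the page-object layer.
KNOWLEDGE = {
    "login": "Login page locators: #username, #password, #login-submit, #forgot-link",
    "checkout": "Checkout page locators: #cart-total, #promo-code, #apply-promo, #pay-now",
    "profile": "Profile page locators: #avatar-upload, #display-name, #save-profile",
}


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k snippets sharing the most words with the query."""
    q = words(query)
    ranked = sorted(KNOWLEDGE.values(), key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def grounded_prompt(task: str) -> str:
    """Prepend retrieved context so the model works from real locators."""
    context = "\n".join(retrieve(task))
    return f"Use ONLY the locators listed in this context:\n{context}\n\nTask: {task}"


def invented_locators(generated_code: str) -> set[str]:
    """Locators used in the generated test that appear nowhere in the knowledge base."""
    known = set(re.findall(r"#[\w-]+", " ".join(KNOWLEDGE.values())))
    used = set(re.findall(r"#[\w-]+", generated_code))
    return used - known
```

Grounding lowers the odds of invented details but does not eliminate them, which is why the post-generation check is kept alongside it.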

Ensuring Reliability in Regulated Industries

For industries like finance, healthcare, and automotive, software failures aren’t just an inconvenience—they can have serious legal, financial, and safety consequences. In these high-stakes environments, the reliability and consistency of every test are absolutely paramount. This is where a concept called “confidence-level testing” becomes essential.

Confidence-level testing involves a few key practices:

- Verifying AI Outputs: We establish strict protocols to validate every single piece of AI-generated test code against proven standards.

- Traceability: It’s crucial that every generated test can be traced back to a specific requirement. This is a must-have for compliance and audits.

- Consistency Checks: We run the AI models multiple times with the same prompt to ensure they produce stable, predictable results every time.
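Traceability and consistency checks both lend themselves to automation. A minimal sketch, assuming each generated test carries a requirement ID in its name (a naming convention we are assuming here, such as `test_REQ_12_...`) and that `generate` is a placeholder for your model call: it lists tests that trace to no known requirement, and measures how often repeated runs of the same prompt agree with the most common result.

```python
import re
from collections import Counter
from typing import Callable

REQ_PATTERN = re.compile(r"test_(REQ_\d+)_")  # assumed naming convention


def untraceable(test_names: list[str], requirements: set[str]) -> list[str]:
    """Tests whose embedded requirement ID is missing or unknown."""
    bad = []
    for name in test_names:
        match = REQ_PATTERN.match(name)
        if match is None or match.group(1) not in requirements:
            bad.append(name)
    return bad


def consistency(prompt: str, generate: Callable[[str], list[str]], runs: int = 5) -> float:
    """Share of runs whose set of generated test names equals the most common set."""
    results = Counter(frozenset(generate(prompt)) for _ in range(runs))
    return results.most_common(1)[0][1] / runs
```

What score counts as stable enough is a policy decision for your team. Lowering the sampling temperature usually makes output more repeatable, though not always perfectly deterministic.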

Successfully navigating these challenges requires a mature understanding of both the technology’s incredible potential and its very real pitfalls. As we cover in our guide on responsible AI implementation, building a solid framework for governance and validation is the key to using AI safely and effectively. Ultimately, the goal is to create a secure, future-ready system where AI acts as a trusted co-pilot on your quality journey.

Measuring the Real-World Impact on Your Bottom Line

For any business leader, a new technology has to answer one fundamental question: what’s the value? Forget the technical jargon for a moment. The real story of generative AI in software testing is how it affects your return on investment (ROI). Moving past the hype means focusing on the key performance indicators (KPIs) that directly impact your operational efficiency and, ultimately, your bottom line.

Bringing AI into your business isn’t a leap of faith; it’s a strategic decision that needs to be backed by clear results. We’re already seeing this play out on a massive scale. Some platforms are reporting up to an 88% reduction in test maintenance burdens. Others have clocked test creation speeds that are a staggering 9x faster than traditional manual methods.
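The two headline figures are expressed differently, and it helps to put them on the same scale. A speedup factor s shrinks the time a task takes to 1/s of the original, so the equivalent percentage reduction in time is:

```latex
\text{time reduction} = 1 - \frac{1}{s}, \qquad s = 9 \;\Rightarrow\; 1 - \frac{1}{9} = \frac{8}{9} \approx 88.9\%
```

In other words, 9x faster creation and an 88% cut in maintenance effort are similar in size: a suite that took 45 hours to write would take about 5 hours at 9x.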

Key Performance Indicators That Truly Matter

To build a solid business case, you have to track what matters. These KPIs are the bridge between the technical improvements happening in your software testing services and the business outcomes you report to stakeholders.

- Reduction in Test Creation Time: This is usually the first and most obvious win. When you can generate test cases automatically, work that once took days or weeks can now be done in minutes. That time saved is a direct reduction in labor costs and a major boost to your development velocity.

- Lower Test Maintenance Overhead: We’ve all been there: brittle tests that break with every minor change. They’re a constant drain on your team’s time and energy. Vendors report that AI self-healing can cut the effort needed to fix broken tests by over 80%. This frees up your best engineers to tackle complex problems instead of doing tedious rework.

- Improved Test Coverage: Humans are creatures of habit, and we can easily miss obscure edge cases. Generative AI is brilliant at exploring application paths and data combinations we might overlook. Bumping test coverage from a respectable 70% to over 95% means catching more bugs before they ever see the light of day, which is always cheaper than fixing them in production.

- Faster Bug Detection: Finding defects early in the cycle is the holy grail of QA. When AI can spot issues before a feature is even fully coded, it dramatically shortens the feedback loop for developers. This speed is a direct line to a faster time-to-market.

Connecting Metrics to Business Outcomes

These technical wins are great, but their true power comes from how they connect to core business goals. Each of these KPIs feeds a larger financial or strategic advantage for the entire organization.

The ultimate value of generative AI in testing isn’t just about doing the same things faster. It’s about fundamentally changing your capacity for innovation by removing the quality assurance bottleneck.

Getting your product into customers’ hands sooner gives you a powerful competitive edge. Slashing operational costs by reducing manual effort frees up budget that you can reinvest into new features or other strategic projects. This is exactly the kind of impact we help our partners achieve, as you can see in our client cases.

At the end of the day, adopting generative AI isn’t about buying another tool. It’s an investment in a more efficient, resilient, and competitive development engine—one that delivers a better product to your users, faster than ever before. This is how you turn technology buzz into a tangible, measurable return.

The Future of Testing is Autonomous and Intelligent

The move toward generative AI in software testing is more than just a technology upgrade; it’s a complete rethink of what quality assurance means. We are shifting from an era where QA engineers spent most of their time manually writing and checking scripts. The new reality sees them evolving into strategic quality architects, guiding and validating smart, automated systems.

This change unlocks a more proactive and predictive way to handle quality. It also makes the human element more important than ever. People can now focus on creative problem-solving and complex thinking, leaving the high-volume, repetitive work to AI.

Emerging Frontiers in Quality Assurance

The road ahead is filled with even more exciting developments. We’re on the verge of truly autonomous testing systems where AI doesn’t just help—it takes the lead. A big part of this evolution involves scaling with AI agents for business, which allows companies to manage increasingly complex testing workflows automatically.

Here are a couple of trends that are shaping this future:

- Self-Healing Test Automation: Picture a test suite that actually fixes itself. When a change to the UI breaks a test, an AI agent can pinpoint the problem, find the new element, update the script, and run the test again. No human intervention needed.

- Predictive Quality Analytics: Generative AI can comb through code repositories and past bug reports to predict where new problems are likely to pop up. It can flag tricky code sections that have a high chance of containing defects before a single test is run, helping teams use their resources much more effectively.
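Predictive quality tools generally train models on much richer signals, but the core intuition, that files which change often and have broken before are likely to break again, fits in a few lines. This is a simple heuristic stand-in, not a generative model; the file names and numbers are hypothetical, churn could be something like the number of commits touching each file, and the equal 50/50 weighting is an arbitrary choice for illustration.

```python
def risk_scores(churn: dict[str, int], past_bugs: dict[str, int]) -> list[tuple[str, float]]:
    """Rank files by a 50/50 blend of normalised churn and bug history."""
    files = set(churn) | set(past_bugs)
    max_churn = max(churn.values(), default=0) or 1  # avoid dividing by zero
    max_bugs = max(past_bugs.values(), default=0) or 1
    scores = {
        f: 0.5 * churn.get(f, 0) / max_churn + 0.5 * past_bugs.get(f, 0) / max_bugs
        for f in files
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

A ranking like this tells a team where to point exploratory testing and extra generated tests first.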

This future isn’t about replacing testers. It’s about giving them powerful cognitive tools. The goal is a partnership where human expertise directs AI-driven execution, achieving a level of efficiency and software quality we’ve never seen before.

Your Partner in the AI Transformation

Navigating this new world requires a partner who understands both AI and software engineering inside and out. As an experienced AI solutions partner, we specialize in building these advanced capabilities into real-world development cycles. Whether it’s through our custom software development or specific AI development services, our goal is always to deliver real, measurable business value.

Generative AI offers huge potential. It promises not just faster testing, but a smarter, more resilient, and more innovative way to build great software.

Ready to see how intelligent solutions can solve your unique challenges? Schedule an AI Discovery Workshop with our experts and start mapping out your path to the future of quality assurance.

Frequently Asked Questions (FAQ)

Stepping into generative AI for software testing is exciting, and naturally, it comes with a lot of questions. Let’s break down some of the most common ones we hear from QA teams and leaders.

How Is Generative AI Different From Traditional Test Automation?

Think of traditional test automation like a very precise, very obedient robot. You give it a detailed script—click here, type this, check that—and it executes those steps perfectly. The problem is, it’s rigid. A small UI change can break the whole script, and it only tests what you explicitly tell it to.

Generative AI is a different beast altogether. Instead of just following instructions, it creates. You can give it plain English requirements, and it will dream up brand-new test cases, generate complex and realistic test data, or even write the automation code for you. The QA professional shifts from being a script-writer to a strategist, guiding the AI and focusing on the bigger picture.

Will Generative AI Replace Human Testers?

Absolutely not. Think of it as the ultimate QA assistant, not a replacement. Generative AI is fantastic at handling the repetitive, time-consuming grunt work with incredible speed, but it doesn’t have a human’s intuition or deep-seated domain knowledge. It can’t “feel” when an application is clunky or have a spark of curiosity that leads to discovering a critical edge-case bug.

The future here is all about partnership. Your testers will guide the AI, validate its output, and be freed up to focus on what humans do best: complex exploratory testing, creative problem-solving, and strategic quality planning. Finding an experienced AI solutions partner can be a great way to build this hybrid human-AI model correctly from the start.

We’re Interested. How Do We Get Started?

The smartest way to begin is by thinking small. Don’t try to overhaul your entire QA process overnight. Instead, pick one specific, high-impact area for a pilot project. Maybe it’s a part of your application with notoriously poor test coverage or one that requires tons of manual data entry for testing.

Focus the AI on just that module. Use it to generate test cases or create synthetic data. This gives your team a safe space to learn and lets you score an early, measurable win that builds momentum. As we explored in our AI use case selection guide, choosing that first project wisely is half the battle. A structured AI Discovery Workshop can also help you map out a clear strategy and pick the right tools, which is a key part of our AI development services.

What New Skills Does My QA Team Need to Adapt?

The skill set is definitely evolving. While deep coding skills for writing test scripts from scratch become less critical, other abilities move to the forefront.

The most important skills are now strong analytical thinking and prompt engineering. Testers need to be able to critically evaluate what the AI produces and know how to write clear, concise instructions—the “prompts”—to get the best results.

At the end of the day, your team’s existing domain knowledge becomes even more valuable. They’re the ones who know your users and your business, and they’ll use that expertise to steer the AI toward creating tests that truly matter.

Ready to see how AI can sharpen your QA process and give you a real competitive advantage? Bridge Global provides expert guidance from strategy all the way to implementation. Discover how AI can give your business an advantage, and let’s get started with a call.